Background:

Graph Theory was among my favorite topics during my undergraduate studies, and in Fall 2016, I managed to find research that would build on my interests in that area as well as leverage the Systems background I had (from one of concentration areas: Devices).

I joined Dr. Oded Green that term, and we began work immediately on Streaming Graphs (also known as Dynamic Graphs). These are graphs where the Nodes $V$ and Edges $E$ change over time.

Problem: Betweenness Centrality on Streaming Graphs

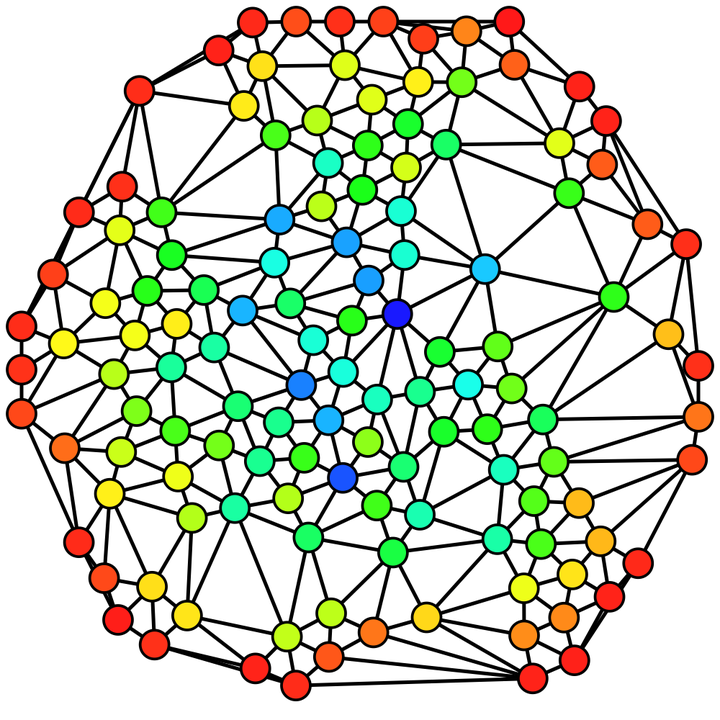

Betweenness Centrality(BC) is a measure of a node’s centrality in a graph based on it’s presence in shortest paths.

Think of it as the hub that connects many different transportation lines. The more dependent other transportation lines are on a given hub, the more central that hub is to the network.

On a static graph, we can compute the BC values for each node pretty easily (in terms of time complexity). However, real-world graphs like Facebook’s Social Network Graph contain hundreds of millions of nodes and billions of edges. If we wanted up-to-date BC values for graphs of this scale, re-computing BC each time a node/edge is inserted or delete becomes infeasible.

So that’s our task: Given a graph and then a sequence of insertions/deletions, can we accurately compute the BC values without recomputing on the entire graph?

Approach:

We created a framework called cuStinger (a portmanteau of Cuda and Stinger), which served as the backbone of our adventure in getting Streaming BC going.

Using this framework (which saved us largely from writing repetitive Cuda code), we implemented the algorithm as described here.

Challenges:

Since we were using Nvidia GPUs and Cuda under the hood, we had various challenges with race conditions, mutex locks, and validating results.

Results:

By the end of that year, we managed to have a running version that handled most edge cases of streaming graphs. There were some we hadn’t implemented yet when I left the lab.

A few weeks later, the lab moved onto a new framework (developed in-house with Oded and his lab), Hornet.

Lessons Learned

- Parallel Computation comes with some challenges, be ready

- Advances in GPU programming are opening doors to many new areas ripe for exploration

- ALWAYS write tests to validate results (we compared our Streaming BC results to our Static BC at each insertion/deletion)

Tools Used

- C/C++

- Cuda

- cuStinger framework